Turing Complete User

“Any error may vitiate the entire output of the device. For the recognition and correction of such malfunctions intelligent human intervention will in general be necessary.”

— John von Neumann, First Draft of a Report on the EDVAC, 1945

“If you can’t blog, tweet! If you can’t tweet, like!”

— Kim Dotcom, Mr. President, 2012

Invisible and Very Busy

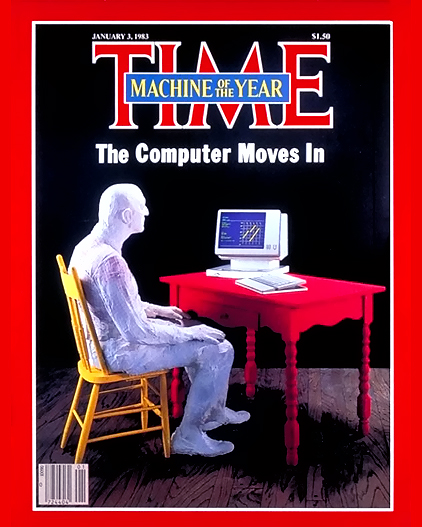

Computers are getting invisible. They shrink and hide. They lurk under the skin and dissolve in the cloud. We observe the process like an eclipse of the sun, partly scared, partly overwhelmed. We divide into camps and fight about advantages and dangers of The Ubiquitous. But whatever side we take — we do acknowledge the significance of the moment.

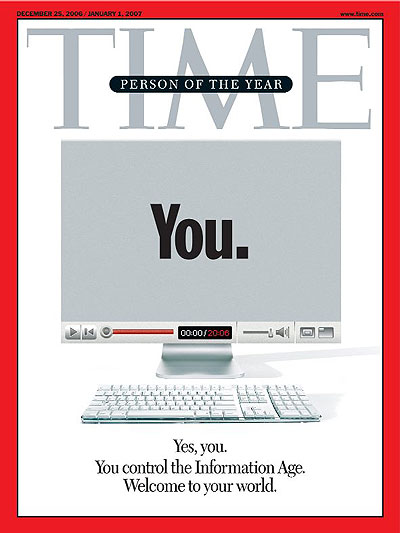

With the disappearance of the computer, something else is silently becoming invisible as well: the User. Users are disappearing as both phenomenon and term, and this development is either unnoticed or accepted as progress, an evolutionary step.

The notion of the Invisible User is pushed by influential user interface designers, specifically by Don Norman, a guru of user-friendly design and long-time advocate of invisible computing. He can actually be called the father of Invisible Computing.

Those who study interaction design read his “Why Interfaces Don’t Work” published in 1990 in which he asked and answered his own question: “The real problem with the interface is that it is an interface”. What’s to be done? “We need to aid the task, not the interface to the task. The computer of the future should be invisible!”1

It took almost two decades, but the future arrived around five years ago, when clicking mouse buttons ceased to be our main input method and touch and multi-touch technologies hinted at our new emancipation from hardware. The cosiness of iProducts, as well as breakthroughs in Augmented Reality (it got mobile), the rise of wearables, the maturing of all sorts of tracking (motion, face) and the advancement of projection technologies erased the visible border between input and output devices. These developments began to turn our interactions with computers into pre-computer actions or, as interface designers prefer to say, “natural” gestures and movements.

Of course computers are still distinguishable and locatable, but they are no longer something you sit in front of. The forecasts for invisibility are so optimistic that in 2012 Apple allowed themselves to rephrase Norman’s predictive statement by putting it in the present tense and binding it to a particular piece of consumer electronics:

We believe that technology is at its very best when it is invisible, when you are conscious only of what you are doing, not the device you are doing it with […] iPad is the perfect expression of that idea, it’s just this magical pane of glass that can become anything you want it to be. It’s a more personal experience with technology than people have ever had.2

In this last sentence, the word “experience” is not an accident, and neither is the word “people”.

The invisible computer, or more accurately the illusion of the computerless, is destroyed if we continue to talk about “user interfaces”. This is why Interface Design has started to rename itself Experience Design, whose primary goal is to make users forget that computers and interfaces exist. With Experience Design there is only you and your emotions to feel, goals to achieve, tasks to complete.

The field is abbreviated as UXD, where X is for eXperience and U is still for the Users. Wikipedia says Don Norman coined the term UX in 1995. However, in 2012 UX designers avoid using the U-word in papers and conference announcements, in order not to remind themselves of all those clumsy buttons and input devices of the past. Users were for the interfaces. Experiences, they are for the PEOPLE!3

In 2008 Don Norman simply ceased to address Users as Users. At an event sponsored by Adaptive Path, a user interface design company, Norman stated “One of the horrible words we use is users. I am on a crusade to get rid of the word ‘users’. I would prefer to call them ‘people.’”4 After enjoying the effect of his words on the audience he added with a charming smile, “We design for people, we don’t design for users.”

A noble goal indeed, but only when perceived in the narrow context of Interface Design. Here, the use of the term “people” emphasizes the need to follow a user-centered rather than an implementation-centered paradigm. The use of “people” in this context is a good way to remind software developers that the User is a human being and needs to be taken into account in design and validation processes.

But when you read it in a broader context, the denial of the word “user” in favor of “people” becomes dangerous. Being a User is the last reminder that there is, whether visible or not, a computer, a programmed system you use.

In 2011 new media theoretician Lev Manovich also became unhappy about the word “user”. He writes on his blog: “For example, how do we call a person who is interacting with digital media? User? No good.”5

Well, I can agree that with all the great things we can do with new media (various modes of initiation and participation, multiple roles we can fill) it is a pity to narrow it down to “users”, but this is what it is. Bloggers, artists, podcasters and even trolls are still users of systems they didn’t program. So they (we) are all users.

We need to take care of this word because addressing people and not users hides the existence of two classes of people — developers and users. And if we lose this distinction, users may lose their rights and the opportunity to protect them. These rights are to demand better software, the ability “to choose none of the above”6, to delete your files, to get your files back, to fail epically and, back to the fundamental one, to see the computer.

In other words: the Invisible User is more of an issue than an Invisible Computer.

What can be done to protect the term, the notion and the existence of the Users? What counter arguments can I find to stop Norman’s crusade and dispel Manovich’s skepticism? What do we know about a user, apart from the opinion that it is “no good” to be one?

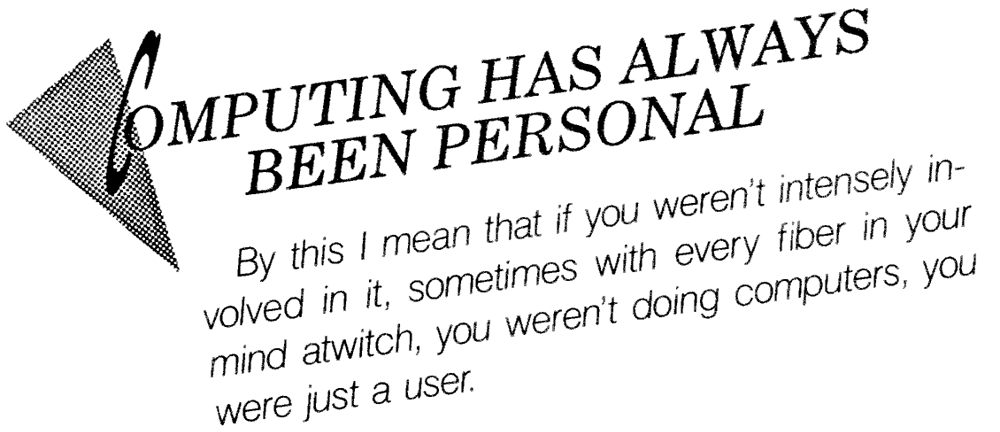

We know that it was not always like this. Before Real Users (those who pay money to use the system) became “users”, programmers and hackers proudly used this word to describe themselves. In their view, the user was the best role one could take in relation to their computer.7

Furthermore, it is wrong to think that first there were computers and developers and only later users entered the scene. In fact, it was the opposite. At the dawn of the personal computer the user was the center of attention. The user did not develop in parallel with the computer, but prior to it. Think about Vannevar Bush’s “As We May Think” (1945), one of the most influential texts in computer culture. Bush spends more words describing the person who would use the Memex than the Memex itself. He described a scientist of the future, a superman. He, the user of the Memex, and not the Memex itself, headed the article.8

20 years later, Douglas Engelbart, inventor of the pioneering personal computer system NLS, as well as hypertext and the mouse, talked about his research on the augmentation of human intellect as “bootstrapping”, meaning that human beings, and their brains and bodies, will evolve along with new technology. This is how French sociologist Thierry Bardini describes this approach in his book about Douglas Engelbart: “Engelbart wasn’t interested in just building the personal computer. He was interested in building the person who could use the computer to manage increasing complexity efficiently.”9

And let’s not forget the title of J.C.R. Licklider’s famous 1960 text, “Man-Computer Symbiosis”, the one that outlined the principles for ARPA’s Command and Control research on Real Time Systems, from which the interactive/personal computer developed.10

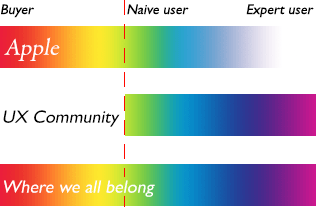

When the personal computer was getting ready to enter the market 15 years later, developers thought about who the model users would be. At XEROX PARC, Alan Kay and Adele Goldberg introduced the idea of kids, artists, musicians and others as potential users for the new technology. Their 1977 paper “Personal Dynamic Media”11 describes important hardware and software principles for the personal computer. But we read this text as revolutionary because it clearly establishes possible users, distinct from system developers, as essential to these dynamic technologies. Another Xerox employee, Tim Mott (aka “The father of user centered design”), brought the idea of a Secretary into the imagination of his colleagues. This image of the “Lady with the Royal Typewriter”12 predetermined the designs of XEROX Star, Apple Lisa and further electronic offices.

So, it’s important to acknowledge that users existed prior to computers, that they were imagined and invented: Users are a figment of the imagination. As a result of their fictive construction, they continued to be re-imagined and re-invented through the 70’s, 80’s, 90’s, and the new millennium. But however reasonable, or brave, or futuristic, or primitive these models of users were, there is a constant.

Let me refer to another guru of user centered design, Alan Cooper. In 2007, when the U word was still allowed in interaction design circles, he and his colleagues shared their secret in “About Face, The Essentials of Interaction Design”:

“As an interaction designer, it’s best to imagine that users, especially beginners, are simultaneously very intelligent and very busy.”13

It is very kind advice (and one of the most reasonable books on interface design, btw) and can be translated roughly as “hey, front end developers, don’t assume that your users are more stupid than you, they are just busy.” But it is more than this. What the second part of this quote gets to so importantly is that Users are people who are very busy with something else.

Alan Cooper is not the one who invented this paradigm, and not even Don Norman with his concentration on task rather than the tool. It originated in the 1970’s. Listing the most important computer terms of that time, Ted Nelson mentions so called “user level systems” and states that these “User-level systems, [are] systems set up for people who are not thinking about computers but about the subject or activity the computer is supposed to help them with.”14 Some pages before he claims:

[Scanned excerpt from Computer Lib, page 3]15

One should remember that Ted Nelson was always on the side of users, and even “naïve users”, so his bitter “just a user” means a lot.

Alienation of users from their computers started in XEROX PARC with secretaries, as well as artists and musicians. And it never stopped. Users were seen and marketed as people whose real jobs, feelings, thoughts, interests and talents (everything that matters) lie outside of their interaction with personal computers.

For instance, in 2007, when Adobe, the software company whose products dominate the so-called “creative industries”, introduced version 3 of Creative Suite, they filmed graphic artists, video makers and others talking about the advantages of this new software package. Particularly interesting was one video of a web designer (or an actress in the role of a web designer): she enthusiastically demonstrated what her new Dreamweaver could do, concluding that in the end “I have more time to do what I like most: being creative”. The message from Adobe is clear. The less you think about source code, scripts, links and the web itself, the more creative you are as a web designer. What a lie. I liked to show it to fresh design students as an example of misunderstanding the core of the profession.

This video is not online anymore, but current ads for Creative Suite 6 are not much different: they feature designers and design evangelists talking about unleashing, increasing and enriching creativity as a direct result of fewer clicks to achieve this or that effect.16

In the book “Program or be Programmed”, Douglas Rushkoff describes similar phenomena:

[…] We see actual coding as some boring chore, a working class skill like bricklaying, which may as well be outsourced to some poor nation while our kids play and even design video games. We look at developing the plots and characters for a game as the interesting part, and the programming as the rote task better offloaded to people somewhere else.17

Rushkoff states that code writing is not seen as a creative activity, but the same applies to engagement with the computer in general. It is not seen as a creative task or as “mature thought”.

In “As We May Think”, while describing an ideal instrument that would augment the scientist of the future, Vannevar Bush mentions:

For mature thought there is no mechanical substitute. But creative thought and essentially repetitive thought are very different things. For the latter there are, and may be, powerful mechanical aids18

Opposed to this, users, as imagined by computer scientists, software developers and usability experts, are the ones whose task is to spend as little time as possible with the computer, without wasting a single thought on it. They require a specialized, isolated app for every “repetitive thought”, and, most importantly, delegate drawing the border between creative and repetitive, mature and primitive, real and virtual, to app designers.

There are periods in history, moments in life (and many hours a day!) where this approach makes sense, when delegation and automation are required and enjoyed. But in times when every aspect of life is computerized it is not possible to accept “busy with something else” as a norm.

So let’s look at another model of users that evolved outside and despite usability experts’ imagination.

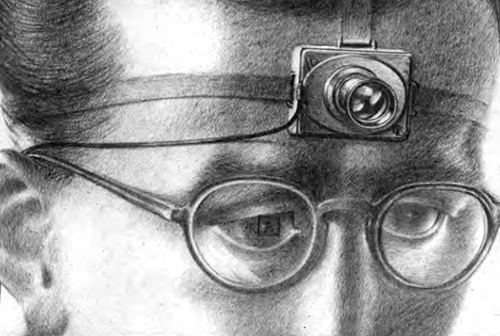

“A scientist of the Future”

Title picture of Vannevar Bush’s “As We May Think”

Illustrated version from Life magazine, 1945

Russian travel blogger Sergey Dolya

photo by Mik Sazonov, 2012

General Purpose, “Stupid” and Universal

In “Why Interfaces Don’t Work” Don Norman heavily criticizes the world of visible computers, visible interfaces and users busy with all this. Near the end of the text he suggests the source of the problem:

“We are here in part, because this is probably the best we can do with today’s technology and, in part, because of historical accident. The accident is that we have adapted a general-purpose technology to very specialized tasks while still using general tools.”19

In December 2011 science fiction writer and journalist Cory Doctorow gave a marvelous talk at the 28th Chaos Communication Congress in Berlin titled “The coming war on general computation”.20 He argues that there is only one way for computers to truly become appliances, the tiny, invisible, comfortable one-purpose things Don Norman was preaching about: to be loaded with spyware. In his words,

“So today we have marketing departments who say things like ‘[…] Make me a computer that doesn’t run every program, just a program that does this specialized task, like streaming audio, or routing packets, or playing Xbox games’ […] But that’s not what we do when we turn a computer into an appliance. We’re not making a computer that runs only the “appliance” app; we’re making a computer that can run every program, but which uses some combination of rootkits, spyware, and code-signing to prevent the user from knowing which processes are running, from installing her own software, and from terminating processes that she doesn’t want. In other words, an appliance is not a stripped-down computer — it is a fully functional computer with spyware on it out of the box.”

By fully functional computer Doctorow means the general purpose computer, or as US mathematician John von Neumann referred to it in his 1945 “First Draft of a Report on the EDVAC” — the “all purpose automatic digital computing system”.21 In this paper he outlined the principles of digital computer architecture (von Neumann Architecture), where hardware was separated from the software and from this the so called “stored program” concept was born. In the mid 40’s the revolutionary impact of it was that “by storing the instructions electronically, you could change the function of the computer without having to change the wiring.”22
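The stored program idea can be made concrete with a toy example. What follows is only a minimal sketch in Python, not a model of the EDVAC: the instruction names (`LOAD`, `ADD`, `MUL`, `HALT`) and the `run` function are invented for illustration. The point it tries to show is that the “wiring” (the `run` loop) never changes, while the machine’s function changes with whatever program is handed to it as data.

```python
# A toy stored-program machine. Everything here is a hypothetical
# illustration: the instruction set and names are assumptions, not
# taken from von Neumann's report.

def run(program, inputs):
    """Execute the instructions stored in `program`; return the accumulator."""
    acc = 0  # single accumulator register
    pc = 0   # program counter: index of the next instruction
    while pc < len(program):
        op, arg = program[pc]
        if op == "LOAD":    # copy an input value into the accumulator
            acc = inputs[arg]
        elif op == "ADD":   # add an input value to the accumulator
            acc += inputs[arg]
        elif op == "MUL":   # multiply the accumulator by an input value
            acc *= inputs[arg]
        elif op == "HALT":  # stop execution
            break
        else:
            raise ValueError(f"unknown instruction: {op}")
        pc += 1
    return acc


# Two different "functions" for the same unchanged machine.
adder = [("LOAD", 0), ("ADD", 1), ("HALT", None)]
multiplier = [("LOAD", 0), ("MUL", 1), ("HALT", None)]

print(run(adder, [6, 7]))       # 13
print(run(multiplier, [6, 7]))  # 42
```

Swapping `adder` for `multiplier` turns an adding machine into a multiplying machine without touching `run`, which is the sense in which such a computer is “all purpose”.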

Today the rewiring aspect doesn’t have to be emphasized, but the idea that a single computer can do everything remains essential, as does the fact that the same general purpose computer is behind “everything”, from dumb terminals to supercomputers.

Doctorow’s talk is a perfect entry point to get oneself acquainted with the subject. To go deeper into the history of the war on general computation you may consider reading Ted Nelson. He was the first to attract attention to the significance of the personal computer’s all-purpose nature. In 1974 in his glorious fanzine “Computer Lib” which aimed to explain computers to everybody, he writes in caps lock:

COMPUTERS HAVE NO NATURE AND NO CHARACTER

Computers are, unlike any other piece of equipment, perfectly BLANK. And that is how we have projected on it so many different faces.23

Some great texts written this century are “The Future of the Internet and How to Stop It” (2008) by Jonathan Zittrain and of course “The Future of Ideas” (2001) by Lawrence Lessig. Both authors are more concerned with the architecture of the internet than the computer itself, but both write about the end-to-end principle that lies at the internet’s core, meaning that there is no intelligence (control) built into the network. The network stays neutral or “stupid”, simply delivering packets without asking what’s inside. It is the same with the von Neumann computer: it just runs programs.

The works of Lessig, Zittrain and Doctorow do a great job of explaining why both computer and network architectures are neither historic accidents nor “what technology wants”.24 The stupid network and the general purpose computer were conscious design decisions.

For Norman, further generations of hardware and software designers and their invisible users dealing with General Purpose technology is both accident and obstacle. For the rest of us the rise and use of General Purpose Technology is the core of New media, Digital Culture and Information Society (if you believe that something like this exists). General purpose computers and Stupid Networks are the core values of our computer-based time and the driving force behind all the wonderful and terrible things that happen to people who work and live with connected computers. These prescient design decisions have to be protected today, because technically it would be no big deal to make networks and computers “smart”, i.e. controlled.

What does all this have to do with “users” versus “people”, apart from the self-evident fact that only users who are at least a little bit busy with computers (to the extent of watching Doctorow’s video till the end) will fight for these values?

I would like to apply the concept of General Purpose Technology to users by flipping the discourse around and redirecting attention from technology to the user that was formed through three decades of adjusting general purpose technology to their needs: The General Purpose User.

General Purpose Users can write an article in their e-mail client, lay out their business card in Excel and shave in front of a web cam. They can also find a way to publish photos online without flickr, tweet without twitter, like without facebook, make a black frame around pictures without instagram, remove a black frame from an instagram picture and even wake up at 7:00 without a “wake up at 7:00” app.

Maybe these Users could more accurately be called Universal Users or Turing Complete Users, in reference to the Universal Machine, also known as the Universal Turing Machine: Alan Turing’s conception of a computer that can carry out any computable task given enough time and memory. Turing’s 1936 vision and design predated and most likely influenced von Neumann’s First Draft and All-purpose Machine.

But whatever name I choose, what I mean are users who have the ability to achieve their goals regardless of the primary purpose of an application or device. Such users will find a way to their aspiration without an app or utility programmed specifically for it. The Universal user is not a super user, not half a hacker. It is not an exotic type of user.

There can be different examples and levels of autonomy that users can imagine for themselves, but the capacity to be universal is still in all of us. Sometimes it is a conscious choice not to delegate particular jobs to the computer, and sometimes it is just a habit. Most often it takes no more than a click or two to uncover your general purpose architecture.

For instance, you can decide not to use Twitter at all and instead inform the world about your breakfast through your own website. You can use LiveJournal as if it were Twitter. You can use Twitter as Twitter but, instead of following people, visit their profiles as you’d visit a homepage.

You can have two Twitter accounts and log in to one in Firefox, and the other in Chrome. This is how I do it and it doesn’t matter why I prefer to manage it this way. Maybe I don’t know that an app for managing multiple accounts exists, maybe I knew but didn’t like it, or maybe I’m too lazy to install it. Whatever, I found a way. And you will as well.

A Universal User’s mindset (it is a mindset, not a set of rules, not a vow) means liaising with hardware and software, behavior that is antipodal to that of the “very busy” user. This kind of interaction makes the user visible, most importantly to themselves. And, if you wish to think about it in terms of Interface Design and UX, it is the ultimate experience.

Does this mean that to deliver this kind of user experience the software industry needs to produce imperfect software or hold itself back from improving existing tools? Of course not! Tools can be perfect.

Though the idea of perfect software could be revised, taking into account that it is used by the General Purpose User, valuing ambiguity and users’ involvement.

And thankfully ambiguity is not that rare. There are online services where users are left alone to use or ignore features. For example, the developers of Twitter didn’t take measures that prevent me from surfing from profile to profile of people I don’t follow. The Dutch social network Hyves allows its users to mess around with background images so that they don’t need any photo albums or instagrams to be happy. Blingee.com, whose primary goal is to let users add glitter to their photos, allows them to upload whatever stamps they want, not glittery, not even animated. It just delivers the user merged layers in return.

I can also mention here an extreme example of a service that nourishes the user’s universality: myknet.org, an Aboriginal social network in Canada. It is so “stupid” that users can re-purpose their profiles every time they update them. Today it functions as a twitter feed, yesterday it was a youtube channel, and tomorrow it might be an online shop. Never mind that it looks very low-tech, as if it was made 17 years ago: it works!

In general the WWW, outside of Facebook, is an environment open for interpretation.

Still, I have difficulties finding a site or an app that actually addresses the users, and sees their presence as a part of the workflow. This may sound strange, because all of web 2.0 is about pushing people to contribute, and “emotional design” is supposed to be about establishing personal connections between the people who made the app and the people who bought it, but I mean something different. I mean a situation when the workflow of an application has gaps that can be filled by users, where smoothness and seamlessness are broken and some of the final links in the chain are left for the users to complete.

I’ll leave you with an extreme example, an anonymous (probably student) project:

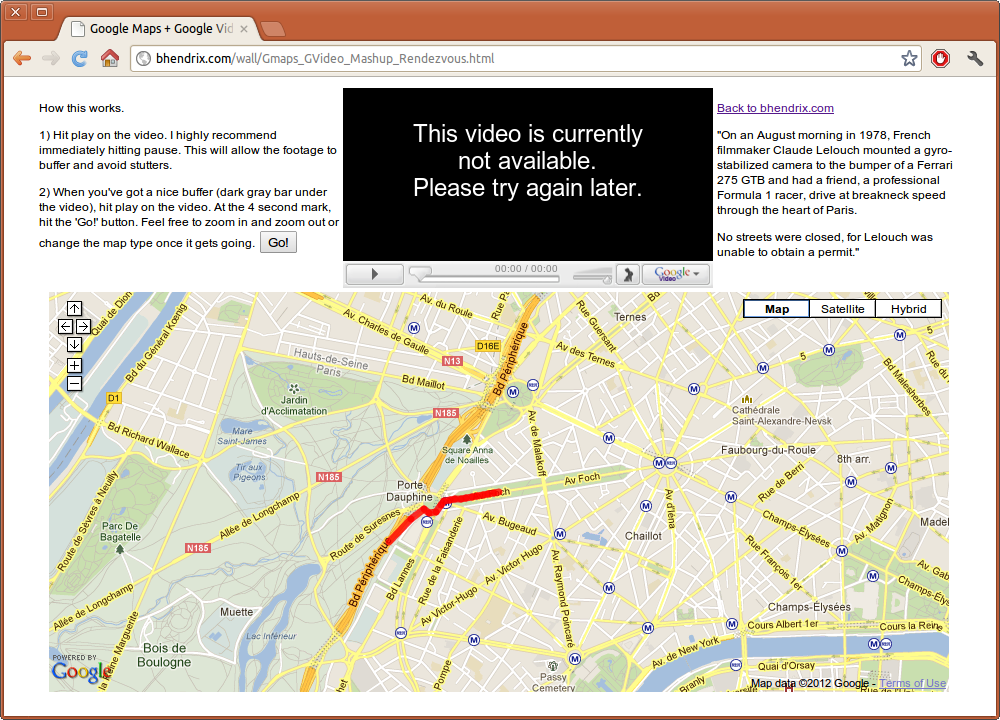

“Google Maps + Google Video + Mashup — Claude Lelouch’s Rendezvous”:

It was made in 2006, when Web 2.0 was on the rise25 and the mash-up was a very popular, mainstream cultural and artistic form. Artists were celebrating new convergences and a blurring of the borders between different pieces of software. Lelouch’s Rendezvous is a mash-up that puts on the same page the famous racing film of the same name and a map of Paris, so that you can follow the car in the film and see its position on the Google map at the same time. But the author failed (or perhaps didn’t intend) to synchronize the video and the car’s movement on the map. As a result the user is left with the instruction: “Hit play on the video. […] At the 4 second mark, hit the ‘Go!’ button.”

The user is asked to press not one but two buttons! It suggests that we can take care of ourselves, that we can complete a task at the right moment. The author obviously counts on users’ intelligence, and has never heard that they are “very busy”.

The fact that the original video file used in the mash-up was removed makes this project even more interesting. To enjoy it, you’ll have to go to YouTube and look for another version of the film. I found one, which means you’ll succeed as well.

There is nothing one user can do that another can’t, given enough time and respect. Computer Users are Turing Complete.

* * *

When Sherry Turkle, Douglas Rushkoff and other great minds state that we need to learn programming and understand our computers in order to not be programmed and “demand transparency of other systems”26, I couldn’t agree more. If the approach to computer education in schools were to switch from managing particular apps to writing apps, it would be wonderful. But apart from the fact that it is not realistic, I would say it is also not enough. I would say it is wrong to say that either you understand computers or you are the user.27

An effort must be made to educate the users about themselves. There should be understanding of what it means to be a user of an “all purpose automatic digital computing system”.

General Purpose Users are not a historic accident or a temporary anomaly. We are the product of the “worse is better” philosophy of UNIX, the end-to-end principle of the internet, the “under construction” and later “beta” spirit of the web. All these designs that demand attention and ask for forgiveness and engagement formed us as users, and we are always adjusting, improvising and at the same time taking control. We are the children of the misleading and clumsy Desktop Metaphor; we know how to open doors without knobs.28

We, general purpose users — not hackers and not people — who are challenging, consciously or subconsciously, what we can do and what computers can do, are the ultimate participants of man-computer symbiosis. Not exactly the kind of symbiosis Licklider envisioned, but a true one.

Olia Lialina, October 2012

I would like to thank

Caitlin Jones for correcting my English and

Dragan Espenschied for designing this page.

Consider reading the follow-up articles:

Rich User Experience, UX and Desktopization of War

The morning after experience design.

Olia Lialina, 2015-01-03

Not Art&Tech

On the role of Media Theory at Universities of Applied Art, Technology and Art and Technology.

Olia Lialina, 2015-11-17

Once Again, The Doorknob

On Affordance, Forgiveness and Ambiguity in Human Computer and Human Robot Interaction.

Olia Lialina, 2018-06-09

Don Norman, “Why Interfaces Don’t Work”, in: Brenda Laurel (Ed.), The Art of Human-Computer Interface Design, 1990, p. 218 ↩

Apple Inc, Official Apple (New) iPad Trailer, 2012 ↩

Another strong force behind ignoring the term User comes from adepts of Gamification. They prefer to address users as gamers. But that’s another topic. ↩

See also Norman’s 2006 essay Words matter: “Psychologists depersonalize the people they study by calling them ‘subjects.’ We depersonalize the people we study by calling them ‘users.’ Both terms are derogatory. They take us away from our primary mission: to help people. Power to the people, I say, to repurpose an old phrase. People. Human Beings. That’s what our discipline is really about.” ↩

Lev Manovich, How do you call a person who is interacting with digital media?, 2011 ↩

Borrowed from the subtitle “You May Always Choose None of the Above” of the chapter “Choice” in: Douglas Rushkoff, Program or be Programmed, 2010, p.46 ↩

“The movie Tron (1982) marks the highest appreciation and most glorious definition of this term. […] The relationship of users and programs is depicted as a very close and personal one, almost religious in nature, with a caring and respecting creator and a responsible and dedicated progeny.” — Olia Lialina and Dragan Espenschied, Do you believe in users?, in: Digital Folklore, 2009 ↩

Vannevar Bush, As We May Think (illustrated version, PDF facsimile), Life magazine, 1945 ↩

Thierry Bardini, Bootstrapping, 2000 ↩

J.C.R. (Joseph Carl Robnett) Licklider, Man-Computer Symbiosis, IRE Transactions on Human Factors in Electronics, volume HFE-1, p.4-11, 1960 ↩

Alan Kay, Personal Dynamic Media, 1977, in: Noah Wardrip-Fruin and Nick Montfort (ed), The New Media Reader, MIT Press, 2003 ↩

See Douglas K. Smith and Robert C. Alexander, Fumbling The Future, 1999, p.110 (on Google Books) ↩

Alan Cooper, Robert Reimann, David Cronin, About Face 3: The Essentials of Interaction Design, 2007, p.45 ↩

Ted Nelson, Computer Lib/Dream Machines, Revised Edition 1987, p.9 ↩

Scanned from Computer Lib, page 3 ↩

See for example the trailers for Adobe Creative Suite 6, 2012 ↩

Douglas Rushkoff, Program or be Programmed, 2010, p.131 ↩

Vannevar Bush, As We May Think (HTML version), The Atlantic Magazine, 1945 ↩

Don Norman, “Why Interfaces Don’t Work”, in: Brenda Laurel (Ed.), The Art of Human-Computer Interface Design, 1990, p. 218 ↩

John von Neumann, Introduction to “The First Draft Report on the EDVAC”, 1945 ↩

M.Mitchell Waldrop, The Dream Machine, 2001, p.62 ↩

Ted Nelson, Computer Lib/Dream Machines, Revised Edition 1987, p.37 ↩

See Kevin Kelly, What Technology Wants, 2010 ↩

Web 2.0 was supposed to be a complete merge of people and technology, but was again progressing alienation and keeping users and developers apart. People were driven from self-made home pages to social networks. ↩

“Politics is a system, complex to be sure, all the same. If people understand something as complicated as a computer, they will demand greater understanding of other things.” — Respondent’s statement, discussed in Sherry Turkle, The Second Self, edition 2004, p.163 ↩

“Instead of teaching programming, most schools with computer literacy curriculums teach programs [… ] The bigger problem is that their entire orientation to computing will be from perspective of users” — Douglas Rushkoff, Program or be Programmed, 2010, p.130 ↩

“Direct-manipulation systems, like the Macintosh desktop, attempt to bridge the interface gulf by representing the world of the computer as a collection of objects that are directly analogous to objects in the real world. But the complex and abundant functionality of today’s new applications — which parallels people’s rising expectations about what they might accomplish with computers — threatens to push us over the edge of the metaphorical desktop. The power of the computer is locked behind a door with no knob.” — Brenda Laurel, Computer as Theater, 1993, p. xviii ↩